You have stumbled over the general problem with encodings: how can I tell which encoding a file is in?

Answer: you can't, unless the file format provides for this. Where a format defines a header for this purpose, it is carefully chosen so that it can be read no matter the encoding. In your case, there is no such hint, hence neither your editor nor Python has any idea what is going on. Therefore, you must use the codecs module and codecs.open(path, mode, encoding), which provides the missing bit in Python.

As for your editor, you must check if it offers some way to set the encoding of a file.

The point of UTF-8 is to be able to encode 21-bit characters (Unicode) as an 8-bit data stream (because that's the only thing all computers in the world can handle). But since most OSs predate the Unicode era, they don't have suitable tools to attach the encoding information to files on the hard disk.

The next issue is the representation in Python. This is explained perfectly in the comment by heikogerlach. You must understand that your console may only be able to display ASCII. To display Unicode, or anything with a character code >= 128, it must use some means of escaping. In your editor, you must not type the escaped display string but what the string means (in this case, you must enter the accented character á and save the file).

That said, you can use the Python function eval() to turn an escaped string into a string:

>>> x = eval("'Capit\\xc3\\xa1n'")

Here the escape "\xc3" has been turned into a single character.

In Python 3.x, unicode_escape needs to start with bytes in order to process the escape sequences (the other way around, it adds them), and it then treats the resulting \xc3 and \xa1 as character escapes rather than byte escapes. As a result, we have to do a bit more work.
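The extra work in Python 3.x can be sketched as a four-step chain. This is one possible way to do it, not necessarily the exact code from the original answer; the variable names (`escaped`, `step1`, and so on) are made up for illustration.

```python
# Python 3.x: a str holding literal backslash escapes, as typed into an editor.
escaped = 'Capit\\xc3\\xa1n\n'

# 1. unicode_escape decodes from bytes, so go to bytes first.
step1 = escaped.encode('ascii')
# 2. Process the escapes: \xc3 and \xa1 become the characters U+00C3 and U+00A1.
step2 = step1.decode('unicode_escape')
# 3. Latin-1 maps code points 0-255 one-to-one onto bytes 0x00-0xFF,
#    which turns those two characters back into the bytes 0xC3 0xA1.
step3 = step2.encode('latin-1')
# 4. Interpret the bytes as UTF-8 to get the intended text.
result = step3.decode('utf-8')

print(repr(result))
```

The Latin-1 round trip is only a trick to reinterpret characters as bytes; it works here because every escaped value fits in one byte.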

In 3.x, the string_escape codec is replaced with unicode_escape, and it is strictly enforced that we can only encode from a str to bytes, and decode from bytes to str.

In 2.x, a string that actually contains these backslash-escape sequences can be decoded using the string_escape codec:

>>> print 'Capit\\xc3\\xa1n\n'.decode('string_escape')  # Python 2.x

The result is a str that is encoded in UTF-8, where the accented character is represented by the two bytes that were written \\xc3\\xa1 in the original string. To get a unicode result, decode again with UTF-8.

Rather than typing escape sequences, just input characters like á in the editor, which should then handle the conversion to UTF-8 and save it.

We can see what the file really contains by reading it as bytes rather than text (Python 3.x) and displaying the result.
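A minimal sketch of that check in Python 3.x follows. Writing the file from code stands in for typing the text into an editor, and the temporary directory and the `path` and `raw` names are assumptions for illustration.

```python
import os
import tempfile

# Stand-in for typing Capit\xc3\xa1n literally into an editor and saving as f2.
path = os.path.join(tempfile.mkdtemp(), 'f2')
with open(path, 'wb') as f:
    f.write(b'Capit\\xc3\\xa1n\n')

# Read the file as bytes rather than text, so we see the raw data.
with open(path, 'rb') as f:
    raw = f.read()

print(raw)       # the backslashes are really in the file
print(len(raw))  # 5 letters + 8 escape characters + 'n' + newline
```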

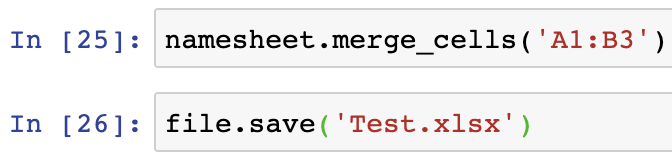


In the notation u'Capit\xe1n\n' (should be just 'Capit\xe1n\n' in 3.x, and must be in 3.0 and 3.1), the \xe1 represents just one character. \x is an escape sequence, indicating that e1 is in hexadecimal.

Writing Capit\xc3\xa1n into the file in a text editor means that it actually contains the literal characters \xc3\xa1 (backslash, x, c, 3, backslash, x, a, 1). Those are 8 bytes and the code reads them all.
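The difference between the one-character escape and the eight literal characters can be checked by counting. This is a small Python 3 illustration with made-up variable names.

```python
# In a normal string literal, \xe1 is an escape for ONE character (á).
one_char = 'Capit\xe1n'
# A raw string keeps the backslashes, like text typed into an editor.
literal = r'Capit\xc3\xa1n'

utf8 = one_char.encode('utf-8')   # á becomes the two bytes 0xC3 0xA1

print(len(one_char))         # 7 characters
print(len(utf8))             # 8 bytes
print(len(literal))          # 14 characters
print(len(r'\xc3\xa1'))      # the 8 literal characters on their own
```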

Maybe I should just JSON dump the string, and use that instead, since that has an asciiable representation! More to the point, is there an ASCII representation of this Unicode object that Python will recognize and decode, when coming in from a file? If so, how do I get it?

>>> print simplejson.dumps(ss)
>>> print >> file('f3','w'), simplejson.dumps(ss)
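The JSON idea does work as an ASCII-only round trip. The sketch below uses Python 3 and the standard-library json module in place of simplejson (a substitution, not what the question used); `ss` is assumed to be the a-acute string from the question.

```python
import json

ss = 'Capit\xe1n'          # the string with an a-acute in it

# json.dumps escapes non-ASCII characters by default (ensure_ascii=True).
dumped = json.dumps(ss)
print(dumped)

# The dumped text is pure ASCII, so it survives any ASCII-safe channel.
ascii_bytes = dumped.encode('ascii')

# Loading it again restores the original one-character á.
restored = json.loads(ascii_bytes.decode('ascii'))
print(restored == ss)
```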

I'm having some brain failure in understanding reading and writing text to a file (Python 2.4). The string has an a-acute in it. So I type Capit\xc3\xa1n into my favorite editor, in file f2.

What am I not understanding here? Clearly there is some vital bit of magic (or good sense) that I'm missing. What does one type into text files to get proper conversions?

What I'm truly failing to grok here is what the point of the UTF-8 representation is, if you can't actually get Python to recognize it when it comes from outside.
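For the round trip asked about here, one hedged sketch in Python 3 is to state the encoding when opening the file and let Python do the conversion. The built-in open() with an encoding argument plays the role that codecs.open(path, mode, encoding) plays in Python 2; the file location and variable names are assumptions.

```python
import os
import tempfile

ss = 'Capit\xe1n\n'   # text containing á as a single character

path = os.path.join(tempfile.mkdtemp(), 'f1')

# Write text; Python encodes it to UTF-8 on the way out.
with open(path, 'w', encoding='utf-8', newline='') as f:
    f.write(ss)

# On disk, á is stored as the two bytes 0xC3 0xA1.
with open(path, 'rb') as f:
    on_disk = f.read()

# Read text; Python decodes the UTF-8 bytes back into characters.
with open(path, 'r', encoding='utf-8', newline='') as f:
    back = f.read()

print(on_disk)
print(back == ss)
```

An editor set to save as UTF-8 produces the same bytes when á is typed directly, which is why no escape sequences belong in the file.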
